I think it’s fair to say that the AI revolution has caught a lot of us off guard, with a spate of recent developments putting AI at the forefront of everybody’s mind whether they like it or not… but one Japanese invention could take things to a whole new level…

We’re not exactly short of AI stories this year, what with the seemingly unstoppable rise of ChatGPT, image generator Midjourney causing all kinds of Catholic chaos, and the apparently imminent threat of across-the-board redundancies as we’re all finally replaced by robots.

On top of that, this month has seen tech leaders from around the world, including Tesla and Twitter boss Elon Musk and Apple co-founder Steve Wozniak, sign an open letter calling on AI labs to pause the training of systems more powerful than GPT-4 for at least six months, citing the “profound risks to society and humanity” that such systems could pose.

But the march of progress shows no signs of slowing. This week, Japanese scientists unveiled a startling new development in the world of AI: a technology for reading your mind.


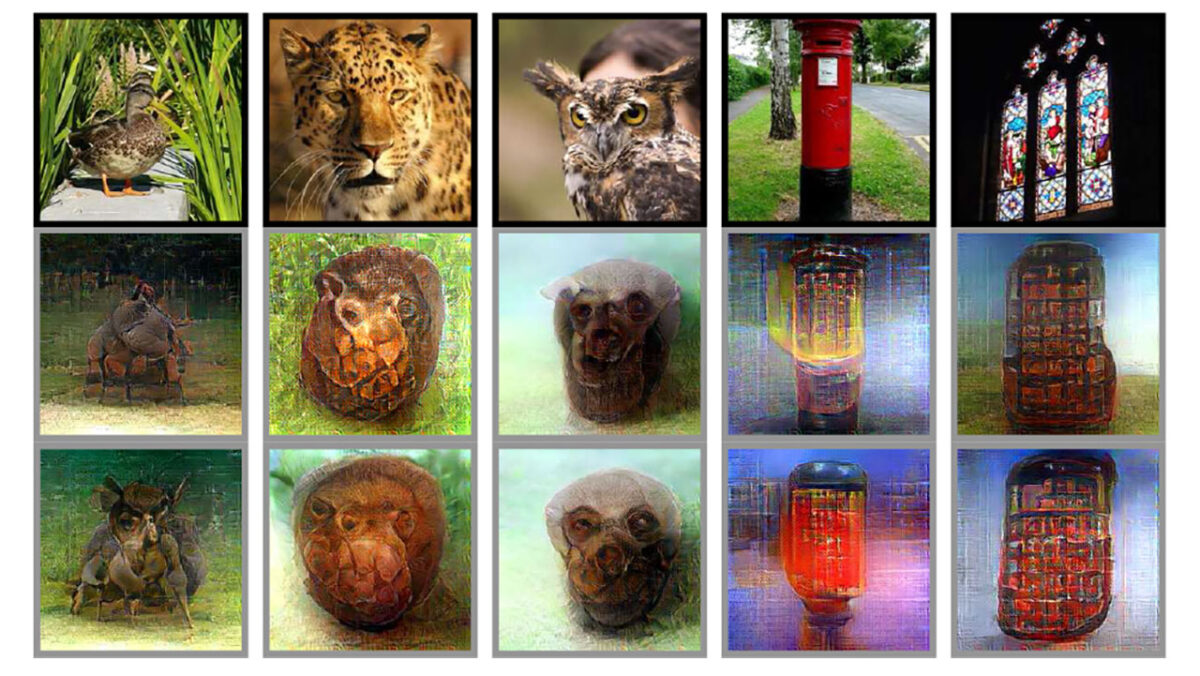

Before anyone gets carried away, though, allow us to clarify: the researchers used Stable Diffusion (SD), a popular AI image generator, to decode human brain activity and create high-fidelity reconstructions of the images test subjects had been asked to look at on a screen.

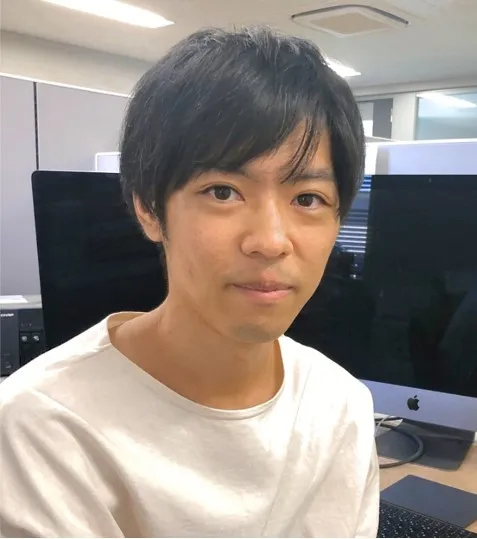

In other words, neuroscientist Yu Takagi and his research partner Shinji Nishimoto have developed a model to “translate” brain activity into a readable format. Takagi, a 34-year-old neuroscientist and assistant professor at Osaka University, told Al Jazeera that it was a surreal experience:

“I still remember when I saw the first images… I went into the bathroom and looked at myself in the mirror and saw my face, and thought, ‘Okay, that’s normal. Maybe I’m not going crazy’”.

Yu Takagi

Stable Diffusion was able to produce images that bore a striking resemblance to the original images shown to trial participants, even though the AI had not been shown the pictures in advance or specially trained to produce such results.

The study has been accepted to the Conference on Computer Vision and Pattern Recognition (CVPR), and the scientists behind the technology hope this will fast-track efforts to legitimise the process and roll out expanded trials.

However, while some are hailing this as a breakthrough, others have been quick to raise concerns about possible misuse by those with malicious intent, and this will likely be a central talking point at the conference.

Though Takagi is keen to press on with development, claiming that he is “now developing a much better [image] reconstructing technique at a very rapid pace,” he was quick to clarify a couple of things. First, that the technology can’t technically read your mind… yet: “We can’t decode imaginations or dreams, but there is potential in the future.”

Second, that privacy is a top priority for him: “Privacy issues are the most important thing. If a government or institution can read people’s minds, it’s a very sensitive issue… there need to be high-level discussions to make sure this can’t happen.”

AI sceptics will also be pleased to hear that Takagi and Nishimoto are careful not to get carried away with their findings. They maintain that there are two pretty significant bottlenecks to genuine mind-reading AI: brain-scanning technology and AI itself.

Despite advances in neural interfaces, scientists reckon we could be decades away from accurately and reliably decoding imagined visual experiences. For Takagi and Nishimoto’s research, subjects had to spend up to 40 hours in a functional magnetic resonance imaging (fMRI) scanner, spread across multiple sessions, which is a costly and time-consuming exercise.

Furthermore, despite AI’s rapid development in recent months, its current limitations present a second speed bump: the method used in this trial can’t be transferred to “novel subjects”. Because the shape of the brain differs between individuals, a model created for one person cannot be applied to another.
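For the curious, here is a toy Python sketch of why such a decoder is tied to one person. It assumes a simple linear (ridge regression) decoder that maps one subject’s fMRI voxel responses to a short vector of image features; that modelling choice is our simplifying assumption, this is not Takagi and Nishimoto’s code, and the synthetic data, voxel counts and function names (`fit_ridge_decoder`, `decode`, `simulate_subject`) are invented for illustration.

```python
import numpy as np


def fit_ridge_decoder(voxels, latents, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.

    voxels:  (n_trials, n_voxels) brain responses for ONE subject
    latents: (n_trials, latent_dim) image features to predict
    """
    n_voxels = voxels.shape[1]
    gram = voxels.T @ voxels + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, voxels.T @ latents)


def decode(weights, voxels):
    """Predict image features from brain responses with a fitted decoder."""
    return voxels @ weights


def simulate_subject(rng, n_trials, n_voxels, latent_dim):
    """Hypothetical stand-in for one person's scans (synthetic, not real fMRI)."""
    voxels = rng.standard_normal((n_trials, n_voxels))
    # Each simulated subject gets their own random voxel-to-feature mapping.
    true_map = rng.standard_normal((n_voxels, latent_dim))
    latents = voxels @ true_map + 0.1 * rng.standard_normal((n_trials, latent_dim))
    return voxels, latents


rng = np.random.default_rng(0)
latent_dim = 8

# Subject A: fit on 300 trials, evaluate on 100 held-out trials.
vox_a, lat_a = simulate_subject(rng, 400, 100, latent_dim)
w_a = fit_ridge_decoder(vox_a[:300], lat_a[:300], alpha=1.0)
pred_a = decode(w_a, vox_a[300:])
r_a = np.corrcoef(pred_a.ravel(), lat_a[300:].ravel())[0, 1]
print(f"A's decoder on A (held out): r = {r_a:.3f}")

# Subject C: same number of voxels, but a different mapping,
# so A's decoder produces essentially unrelated predictions.
vox_c, lat_c = simulate_subject(rng, 400, 100, latent_dim)
r_c = np.corrcoef(decode(w_a, vox_c).ravel(), lat_c.ravel())[0, 1]
print(f"A's decoder on C: r = {r_c:.3f}")

# Subject B: in this toy setup a different brain yields a different voxel
# count, so A's weight matrix cannot even be multiplied with B's data.
vox_b, _ = simulate_subject(rng, 400, 120, latent_dim)
try:
    decode(w_a, vox_b)
    transfer_failed = False
except ValueError:
    transfer_failed = True
print("A's decoder fails on B:", transfer_failed)
```

Running it should show a high held-out correlation for subject A, a correlation near zero when A’s decoder is applied to subject C, and a shape error for subject B. Real brain data is far messier, but the toy makes the same point: the learned weights describe one particular brain.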

Privacy will be a worry for many, but it’s worth noting that if this technology does reach its full potential, it could have a positive, paradigm-shifting impact on fields including, but not limited to, medicine, communication and entertainment.

Ricardo Silva, a professor of computational neuroscience at University College London and research fellow at the Alan Turing Institute, shared one specific example: “This may turn out to be one extra way of developing a marker for Alzheimer’s detection and progression evaluation”.

Ultimately, this is just one of scores of upcoming advances in AI and, like so many of them, it’ll be a good while before it becomes part and parcel of our everyday lives. So you can put away those tinfoil hats… for now.